The Israel Defense Forces’ (IDF) account on X, which has been exceptionally active since Hamas attacked Israeli forces and people on 7 October, shared a text-only post on 23 October that read: “This would have been a photo of a lifeless pregnant woman next to her beheaded unborn baby cut out of her belly by Hamas terrorists. Due to this platform’s guidelines, we can’t show you that.”

While social media is flooded with graphic images from war-torn Gaza, some users appear to be using platforms’ content ‘policies’ as cover to spread unverified claims about the war.

The claim about Hamas having beheaded babies was later contradicted by an Israeli official, who told CNN that while there were cases of Hamas militants “carrying out beheadings and other ISIS-style atrocities,” Israel could not confirm if the bodies belonged to “men or women, soldiers or civilians, adults or children.”

Closer to home, TV9 Network’s executive editor Aditya Raj Kaul shared a post on X about a similar incident, which also described a foetus being removed from a mother’s body and called Hamas “inhuman savages.”

When a user asked for evidence to back this disturbing claim, Kaul replied that a friend had shared photos from the ground and that he “can’t post it.” Other users then called Kaul out for the lack of evidence, accusing him of spreading “lies.”

Shortly after, a Community Note appeared under Kaul’s post, stating that the story he described actually referred to a 1982 incident in Lebanon, when Israeli forces had massacred Palestinians at the Sabra and Shatila refugee camps in Beirut.

This claim was also debunked by fact-checkers.

But X’s sensitive media policy has not stopped the flow of graphic or violent content. At the time of writing, a video showing a medical worker holding up the remains of an infant, purportedly killed in one of Israel’s bombings in Gaza, is still up and being shared.

There are many examples of such content, which anyone can access with simple keywords. So, is X selective in its approach to content moderation? Nope.

Two Wars in Two Years, But the Problems Remain the Same

On 24 February 2022, Russia launched a full-scale military invasion of Ukraine. The news sent social media and news organisations into a frenzy, and both struggled to sort fact from fake. Disinformation such as Ukraine’s “Ghost of Kyiv” myth, along with a wide variety of misinformation tailored to every view and perspective, flooded the internet.

Did we learn to be careful from this? Not really.

Hamas’ surprise attack on Israeli people and forces came around 18 months after the Russian invasion, and has since snowballed into a wide-scale conflict that is now in its third week.

The major difference this time? It is now even harder for people to find and identify information from credible sources, as seen with the blue-check subscription plan on X (formerly Twitter).

X and the Hurdle of Graphic Content

For 900 rupees (or 8 USD) a month, any user can get a ‘verified’ mark next to their name, share longer posts, enjoy a boost in reach and several other advantages that non-paying users, no matter who they are, do not.

Although it was initially pitched as a way to democratise the platform, Musk’s X has become an ideal playground for misinformation. Here, any post by a paying user, regardless of their credibility, can easily reach far more people than solid sources of information, such as activists or journalists.

This, in turn, has made it easier for people to spread graphic, horrific and most importantly, unverified visuals and information amid the Israel-Hamas conflict.

Since the conflict began, social media users have shared news and information about the war in real time. Alarming visuals and shocking news have reached people across platforms. Amid this mass transfer of media and information, the importance of sharing only verified information has become clear.

From old and unrelated visuals to hateful, damaging narratives, social media platforms have hosted a range of misinformation over the past few weeks. Much of this has come from accounts subscribed to X Premium. This is happening despite the platform’s guidelines prohibiting graphic content.

X’s Approach to Tackle This Deluge of Misinformation

X’s content policy has remained largely unchanged in the wake of the conflict. (Our email asking about relevant changes was met with a “Busy now, please check back later.” response.)

According to the company’s blog post dated 24 October, X’s Community Notes has been working harder than ever.

Since 10 October, “notes have been seen well over 100 million times, addressing an enormous range of topics from out-of-context videos to AI-generated media, to claims about specific events,” the platform said.

Notes have led to fewer interactions with “potentially misleading content,” said X, with some authors choosing to “delete their posts after a note is added.”

While X’s policies say that it does not allow graphic content depicting “death, violence, medical procedures, or serious physical injury in graphic detail,” we came across several posts with visuals that fit these criteria perfectly.

Dina Sadek, a research fellow at the Digital Forensic Research Lab, told NPR that the graphic visuals being shared, whether they are ‘fake’ or not, cause confusion and fuel hatred on social media.

Some of these visuals may never be verified, either because they lack identifying details or because the conflict zone and the parties involved are inaccessible.

Meta: Two Platforms, One Solution?

Meta, on the other hand, has barred users from posting content containing “praise for Hamas” and “violent or graphic content,” according to a blog post dated 18 October that outlines Meta’s efforts to curb hate and misinformation related to the Israel-Hamas war.

The post said that, in response to the crisis, Meta “quickly established a special operations center staffed with experts,” including people fluent in Hebrew and Arabic, who could “closely monitor and respond to this rapidly evolving situation in real time.” This allowed for faster takedowns of content that violated its Community Standards or Community Guidelines.

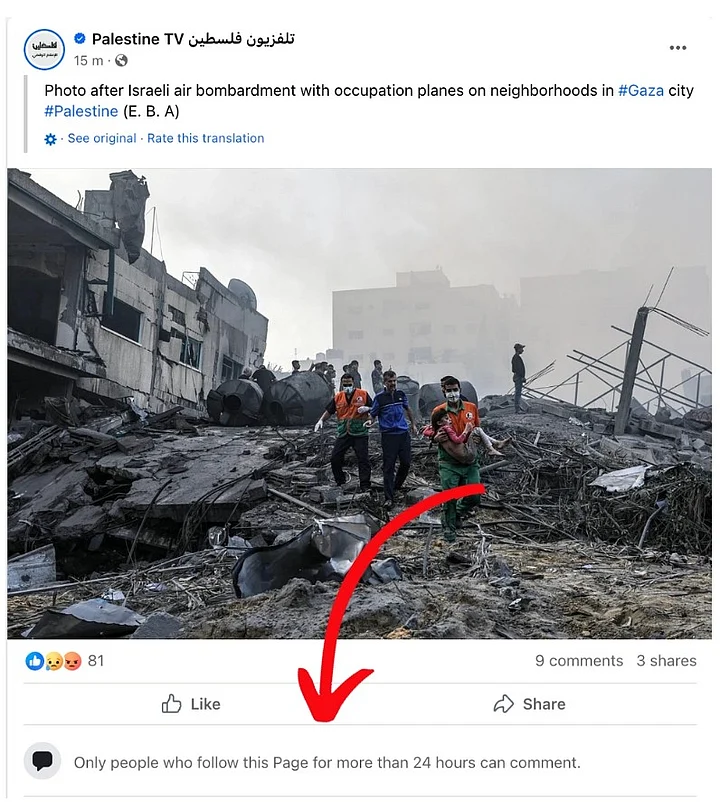

On Facebook too, the IDF shared the same post about not being able to show graphic content due to the platform’s policy.

However, we found several instances of graphic visuals shared on Facebook behind an overlay warning viewers about violent or graphic content, indicating that users were still able to post such content.

Many Instagram users, especially activists and journalists, complained that their pro-Palestine posts were getting limited reach and views, calling it a ‘shadow ban’ by Instagram.

This not only hinders the spread of news from credible sources, but also prevents fair messaging from reaching wide audiences at a time when social media feeds are curated by algorithms.

Meta called it a “bug” and said that the error had been fixed.

While going through posts shared by pages on either side of the conflict, such as the IDF’s page and the Facebook pages of the news organisations Alarabiya Palestine and Palestine TV, we saw that people who had not followed these pages for more than 24 hours could not leave comments.

This, Meta said, was one of their “steps to reduce the visibility of potentially offensive comments under posts on Facebook and Instagram.”

Meta also stated that it had expanded its Violence and Incitement policy. It said it would take down content identifying people “kidnapped by Hamas” to prioritise their safety, while still allowing content with blurred images. However, it would still take down such content if it was “unsure or unable to make a clear assessment.”

In practice, this policy did not appear to be strictly enforced or very effective.

While the contents of these posts could not be verified, we came across many photos and videos that clearly shared identifying information, such as the names, faces and ages, of people allegedly kidnapped by Hamas.

Can platforms be held completely responsible for bad actors? No. But whenever widely used social media platforms fall short of fulfilling their basic duty, the result is a mess.

Nearly eight months after Musk acquired Twitter (now X), the company’s head of Trust and Safety, Ella Irwin, resigned in June 2023, Reuters reported. This came after the platform drew heavy criticism for its lax content moderation policies, including the dissolution of its Trust and Safety Council in December 2022, as per NPR.

Speaking to NBC after the onset of the ongoing Israel-Hamas conflict, Irwin said that her time at X was the “hardest experience” of her life, and she quit due to differences in “non negotiable principles.”

X’s Community Notes programme is now overwhelmed, NBC found: even known misinformation has gone unaddressed, and fact-checks on the platform have been delayed.

Kim Picazio, a Community Notes volunteer, wrote about her experience on Threads, saying that despite working hard all weekend to approve notes on “hundreds of posts which were demonstrably fake news”, it took at least two days for those notes to appear under posts.

What CAN We Do?

When people affiliated with platforms are struggling to keep up with the volume of misinformation, what can a fact-checker do about claims that aren’t backed by evidence?

Ignoring posts which make serious claims may cause a dangerous narrative to grow further, but “fact-checking” without having visuals or concrete evidence to verify details is an exercise in futility.

One way forward would be to ask those who make such claims, and who cite platform guidelines as their reason for not sharing visuals, to send the media privately, whether via personal messages, web hosting, or file-sharing services such as Dropbox or Google Drive. This is one way to begin verifying gruesome claims.

However, the people sharing this content may not cooperate. In such cases, when platforms are overwhelmed and fact-checkers are helpless, media literacy emerges as the true hero.

During high-tension, breaking-news situations, the ability of viewers and readers to take everything they see with a grain of salt, and to pause and think before forwarding content, is one of the most important ways to help stop the flow of dis- and misinformation on the internet.

(Not convinced of a post or information you came across online and want it verified? Send us the details on WhatsApp at 9643651818, or e-mail it to us at webqoof@thequint.com and we'll fact-check it for you. You can also read all our fact-checked stories here.)